Dear friends,

I am working on coupling CROCO with WW3. As a first step, I am trying to run the Benguela coupled test case, following the methodology presented in the last CROCO summer course: http://mosa.dgeo.udec.cl/CROCO2024/CursoAvanzado/Day3_morning.pdf

I have run each model separately without problems. For the coupled run, I configured my WW3 switch file as follows:

F90 NOGRB TRKNC NC4 DIST MPI PR3 UQ FLX0 LN1 ST4 STAB0 NL1 BT4 DB1 MLIM TR0 BS0 BS0 IC0 IS0 REF1 XX0 WNT0 WNX1 RWND CRT0 CRX1 COU OASIS OASOCM O0 O1 O2 O2 O2a O2b O2c O3 O4 O5 O6 O7

In the CROCO cppdefs I have also defined OW_COUPLING and MRL_WCI.

I have checked the dates of the input files for both CROCO and WW3. Although they use different time units (hours and days) and have different start and end dates, they all cover the month of January 2005.
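For reference, the kind of check I mean can be sketched as below. This is only a minimal illustration, assuming each file has a time axis given as a number of hours or days since some reference date; the function name `covers`, the reference dates, and the first/last values are hypothetical placeholders, not the actual values from my files.

```python
from datetime import datetime, timedelta

def covers(period_start, period_end, first, last, units, epoch):
    """Return True if the time axis [first, last] spans the whole period.

    first/last are offsets from `epoch`, expressed in `units`
    ("hours" or "days").
    """
    step = {"hours": timedelta(hours=1), "days": timedelta(days=1)}[units]
    axis_start = epoch + first * step
    axis_end = epoch + last * step
    return axis_start <= period_start and axis_end >= period_end

# Period the coupled run must be covered for: all of January 2005.
jan_start = datetime(2005, 1, 1)
jan_end = datetime(2005, 2, 1)

# Hypothetical axis in hours since 2005-01-01 (31 days = 744 hours).
hours_ok = covers(jan_start, jan_end, 0.0, 744.0, "hours", datetime(2005, 1, 1))

# Hypothetical axis in days since 2000-01-01
# (2005-01-01 is day 1827, 2005-02-01 is day 1858).
days_ok = covers(jan_start, jan_end, 1827.0, 1858.0, "days", datetime(2000, 1, 1))

print(hours_ok, days_ok)
```

Converting both axes to calendar dates first makes the comparison independent of the units and reference date used in each file.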

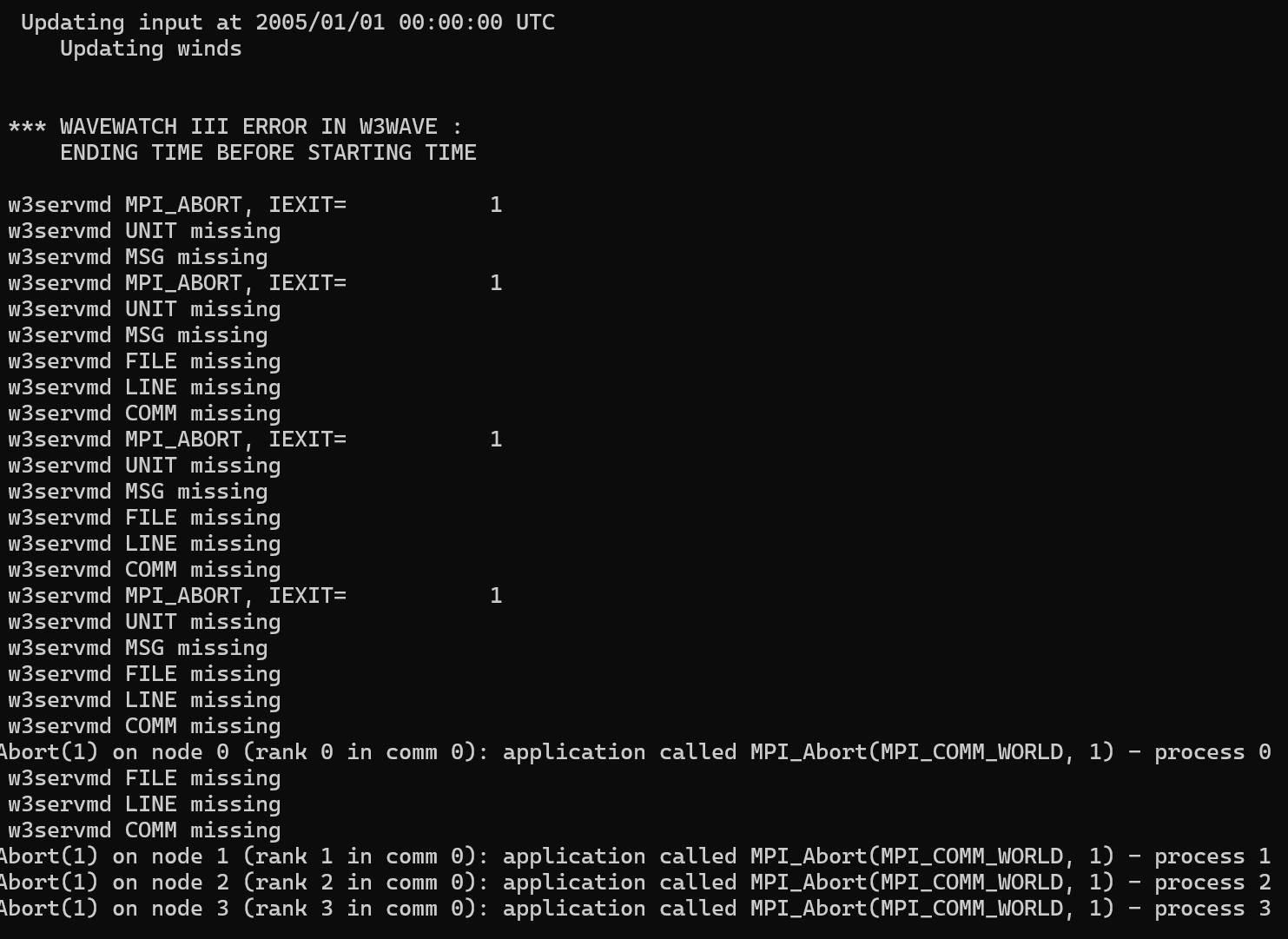

When I run the coupled model on the cluster, I get the following error:

You can access the run files I am using at the link below. The error is saved in the file “job_1226.out”. Does anyone have an idea what is causing it?

Please let me know if additional information is needed.

Thank you for your support and kind attention.

Regards,

Cesar.